Machine Learning (ML) and Artificial Intelligence (AI) have become indispensable tools in today's technology-driven world. They power everything from recommendation systems to autonomous vehicles.

Central to the rapid advancements in ML and AI is the use of specialized hardware, and NVIDIA's advanced Graphics Processing Units (GPUs) are at the forefront of this revolution.

We'll explore how to accelerate machine learning with NVIDIA's advanced GPUs. We'll cover the key concepts, practical steps, and benefits for both beginners and experienced practitioners in the field.

Understanding NVIDIA's Advanced GPUs

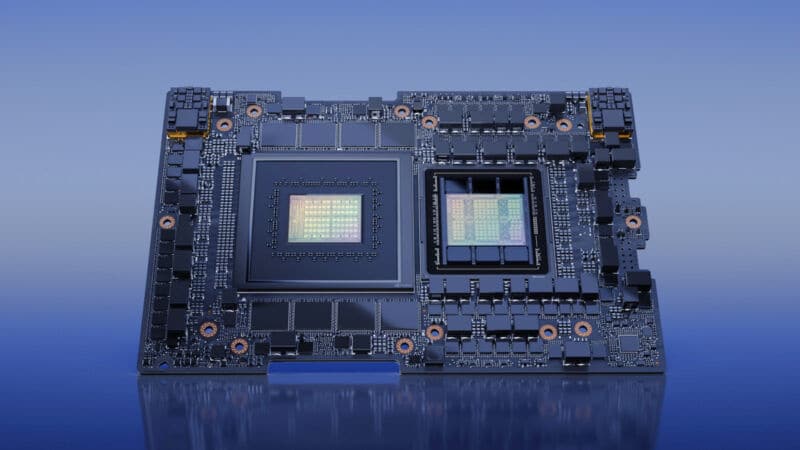

NVIDIA, a global leader in visual computing technologies, has developed a range of advanced GPUs specifically designed for machine learning workloads.

These GPUs belong to NVIDIA's data center product line (previously sold under the Tesla brand), which includes the A100 and the A40, both based on the Ampere architecture. Let's dive into what makes these GPUs stand out.

Tensor Cores for Enhanced Performance

NVIDIA's advanced GPUs have Tensor Cores, specialized hardware units designed to accelerate matrix multiplication and deep learning operations.

By executing matrix multiply-accumulate operations in dedicated hardware, typically with reduced-precision inputs such as FP16, Tensor Cores substantially increase training and inference throughput for deep learning models.
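Tensor Cores are commonly used in mixed precision: the inputs are stored in a reduced-precision format such as FP16, while the running sum is kept in a wider format. The sketch below is a plain-Python illustration of why the wider accumulator matters, not a model of the hardware itself. The function names are made up for this example, and Python's 64-bit floats stand in for an FP32 accumulator.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def dot_fp16_accumulate(a, b):
    """Dot product where the running sum is also rounded to FP16."""
    total = 0.0
    for x, y in zip(a, b):
        total = to_fp16(total + to_fp16(x) * to_fp16(y))
    return total


def dot_wide_accumulate(a, b):
    """Dot product with FP16 inputs but a wider accumulator.

    Assumption: Python's 64-bit float stands in for the FP32 accumulator.
    """
    total = 0.0
    for x, y in zip(a, b):
        total += to_fp16(x) * to_fp16(y)
    return total


# 10,000 products of 0.01 * 1.0; the exact answer is 100.
a = [0.01] * 10_000
b = [1.0] * 10_000
narrow = dot_fp16_accumulate(a, b)
wide = dot_wide_accumulate(a, b)
print(f"FP16 accumulator: {narrow:.3f}")
print(f"wide accumulator: {wide:.3f}")
```

With the FP16 accumulator, the sum stalls well below 100 because each small addend is eventually rounded away. The wide accumulator stays close to the true value, with only the small error introduced by rounding the inputs.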

High Memory Capacity and Bandwidth

Machine learning often requires large datasets and models that demand significant memory and bandwidth. NVIDIA's data center GPUs pair large memory capacities (the A100, for example, is available with 40 GB or 80 GB) with high memory bandwidth, which lets you work with large datasets and complex models while reducing memory-related bottlenecks.
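To get a feel for how much memory a model needs, a back-of-the-envelope estimate is often enough. The sketch below assumes training with the Adam optimizer, where weights, gradients, and two optimizer buffers are all kept at the same precision, and it ignores activations entirely. The function names and the 1-billion-parameter model are hypothetical.

```python
def training_memory_gb(num_params: int, bytes_per_param: int = 4) -> float:
    """Rough memory for weights, gradients, and two Adam moment buffers.

    Assumes everything is stored at the same precision and ignores
    activations, which depend on batch size and architecture.
    """
    copies = 4  # weights + gradients + Adam first and second moments
    return num_params * bytes_per_param * copies / 1e9


def inference_memory_gb(num_params: int, bytes_per_param: int = 2) -> float:
    """Rough memory for the weights alone (FP16 by default)."""
    return num_params * bytes_per_param / 1e9


params = 1_000_000_000  # hypothetical 1-billion-parameter model
print(f"training:  ~{training_memory_gb(params):.0f} GB")
print(f"inference: ~{inference_memory_gb(params):.0f} GB")
```

Real usage is usually higher once activations, framework overhead, and data batches are included, so treat the result as a lower bound.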

Multi-GPU Scalability

To achieve this kind of acceleration, NVIDIA GPUs can be combined in multi-GPU configurations, either on your own hardware or through a cloud service such as Gcore's AI GPU cloud. This allows you to distribute workloads across devices and train larger models faster. Scalability is a crucial feature for tackling complex machine-learning tasks.
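One common way to distribute a training workload is data parallelism: each GPU processes a slice of the batch, and the partial gradients are then combined. The sketch below simulates that idea in plain Python for a one-parameter model y = w * x. The "workers" are ordinary function calls rather than real devices, and all names are invented for this illustration.

```python
def shard(data, num_workers):
    """Split data into num_workers contiguous, nearly equal chunks."""
    size, extra = divmod(len(data), num_workers)
    chunks, start = [], 0
    for i in range(num_workers):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def local_gradient_sum(w, samples):
    """Sum of d/dw (w*x - y)^2 over one worker's samples."""
    return sum(2.0 * (w * x - y) * x for x, y in samples)


def data_parallel_gradient(w, data, num_workers):
    """Average gradient computed from per-worker partial sums."""
    partial_sums = [local_gradient_sum(w, chunk) for chunk in shard(data, num_workers)]
    # Stand-in for an all-reduce: combine the partial sums from every worker.
    return sum(partial_sums) / len(data)


data = [(float(x), 3.0 * x) for x in range(1, 9)]  # toy data for y = 3x
w = 1.0
single = local_gradient_sum(w, data) / len(data)
parallel = data_parallel_gradient(w, data, num_workers=4)
print(f"single device: {single}, four workers: {parallel}")
```

Both paths produce the same gradient, which is why data parallelism can speed up training without changing what the model learns from a given batch.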

Getting Started with NVIDIA's Advanced GPUs

Now that we understand the benefits of NVIDIA's advanced GPUs, let's discuss how to get started and achieve remarkable machine learning acceleration.

Selecting the Right GPU

The first step is to choose the right NVIDIA GPU for your specific machine-learning tasks. Consider factors like budget, power requirements, memory capacity, and the level of performance needed.

The NVIDIA A100 and A40 are excellent choices for various applications, but your decision should align with your project's requirements.
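As a small illustration of matching a GPU to a project's requirements, the sketch below shortlists cards by memory alone. The memory sizes are the commonly listed figures for these cards and should be checked against current datasheets. The `GpuOption` type, the `shortlist` helper, and the 20% headroom are assumptions made for this example. Budget and power limits could be filtered the same way using figures from your own quotes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuOption:
    name: str
    memory_gb: int


# Memory sizes as commonly listed for these cards; verify before purchasing.
CANDIDATES = [
    GpuOption("A100 40GB", 40),
    GpuOption("A40", 48),
    GpuOption("A100 80GB", 80),
]


def shortlist(required_gb: float, headroom: float = 0.2):
    """Return candidates whose memory covers the requirement plus headroom."""
    needed = required_gb * (1.0 + headroom)
    return [gpu.name for gpu in CANDIDATES if gpu.memory_gb >= needed]


print(shortlist(16))  # a workload needing about 16 GB
print(shortlist(45))  # a workload needing about 45 GB
```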

GPU Installation

Once you've chosen the GPU, install it in your machine. If you're using a data center setup, consult your IT department for proper installation. Ensure that you have a compatible NVIDIA driver and the supporting software your frameworks expect.
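One way to confirm that the driver is installed and the GPU is visible is to query `nvidia-smi`, the command-line utility that ships with the NVIDIA driver. The sketch below wraps that call and returns an empty list when the tool is missing or fails. The `query_gpus` helper is a name chosen for this example.

```python
import shutil
import subprocess


def query_gpus():
    """Return one dict per GPU reported by nvidia-smi, or [] if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver utilities not installed or not on PATH
    try:
        result = subprocess.run(
            [
                "nvidia-smi",
                "--query-gpu=name,driver_version,memory.total",
                "--format=csv,noheader",
            ],
            capture_output=True,
            text=True,
            timeout=30,
            check=True,
        )
    except (subprocess.SubprocessError, OSError):
        return []  # the tool exists but could not report on the GPUs
    gpus = []
    for line in result.stdout.strip().splitlines():
        fields = [field.strip() for field in line.split(",")]
        if len(fields) != 3:
            continue  # skip lines that do not match the requested columns
        name, driver, memory = fields
        gpus.append({"name": name, "driver": driver, "memory": memory})
    return gpus


gpus = query_gpus()
if gpus:
    for gpu in gpus:
        print(f"{gpu['name']}: driver {gpu['driver']}, {gpu['memory']}")
else:
    print("No NVIDIA GPU detected; check the driver installation.")
```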

Framework and Software

NVIDIA GPUs are supported by popular machine learning frameworks like TensorFlow and PyTorch, both of which run on top of CUDA, NVIDIA's parallel computing platform. Install the required software and libraries to create and run your machine-learning models seamlessly.
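After installing the software, it helps to check that a framework can actually see the GPU. The sketch below reports whether PyTorch and TensorFlow are importable and, if PyTorch is present, whether it detects a CUDA device through `torch.cuda.is_available()`. It runs without either framework installed. The `framework_status` helper is a hypothetical name for this example.

```python
import importlib
import importlib.util


def framework_status():
    """Report which frameworks are importable and whether PyTorch sees a GPU."""
    status = {
        "torch": importlib.util.find_spec("torch") is not None,
        "tensorflow": importlib.util.find_spec("tensorflow") is not None,
    }
    cuda_visible = False
    if status["torch"]:
        try:
            torch = importlib.import_module("torch")
            cuda_visible = bool(torch.cuda.is_available())
        except Exception:
            cuda_visible = False  # installed, but the import or check failed
    status["torch_cuda"] = cuda_visible
    return status


status = framework_status()
for name, ok in status.items():
    print(f"{name}: {'yes' if ok else 'no'}")
```

If `torch` reports yes but `torch_cuda` reports no, the usual suspects are a missing driver or a CPU-only build of the framework.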

Optimize for Deep Learning

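To harness the full potential of NVIDIA's GPUs, you need to optimize your machine-learning code for deep-learning operations. Use GPU-accelerated libraries such as cuDNN and cuBLAS, usually through your framework, and enable features like mixed-precision training so that the Tensor Cores are actually put to work.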

Scaling with Multi-GPU

If your tasks are particularly resource-intensive, consider a multi-GPU setup. Multi-GPU configurations can significantly accelerate training times for complex deep-learning models.
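How much faster a multi-GPU setup will be depends on how much of the training loop can actually run in parallel. Amdahl's law, a general rule from parallel computing rather than anything specific to NVIDIA hardware, gives a quick upper bound. In the sketch below, the 90% parallel fraction is an assumed figure for illustration; measure your own workload to get a realistic value.

```python
def amdahl_speedup(parallel_fraction: float, num_gpus: int) -> float:
    """Upper bound on speedup when only part of the work scales with GPUs."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / num_gpus)


# Assumed: 90% of each training step parallelizes across GPUs.
for n in (1, 2, 4, 8):
    print(f"{n} GPU(s): at most {amdahl_speedup(0.9, n):.2f}x")
```

The bound flattens as GPUs are added, which is why reducing serial work such as data loading and communication matters as much as adding hardware.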

Benefits of Leveraging NVIDIA's GPUs

Now that you've set up your NVIDIA GPU for machine learning, let's explore the benefits you can expect.

Unparalleled Performance

When it comes to top-tier performance in machine learning, NVIDIA's advanced GPUs are among the strongest options available.

They deliver a level of computational power that speeds up the entire machine-learning process, from experimentation to deployment.

With these GPUs at your disposal, you can anticipate not only faster model training but also quicker inference times.

Additionally, their performance allows you to handle larger and more complex datasets, which makes models practical that would be too slow to train on CPUs alone.

Cost-Efficiency

While investing in NVIDIA's advanced GPUs represents a significant upfront cost, they can pay for themselves over time.

Thanks to the accelerated performance they provide, your model development and deployment processes will be expedited, reducing time-to-market for your projects.

This, in turn, translates into substantial cost savings, as the sooner your models are deployed, the sooner they can start generating value. In the grand scheme of your project's economics, this cost-efficiency becomes a game-changer.

Unmatched Flexibility and Versatility

One of the most outstanding features of NVIDIA's advanced GPUs is their unmatched flexibility and versatility.

These GPUs are like a Swiss Army knife for machine learning tasks, capable of handling various applications, from image recognition to natural language processing.

Whether you are engaged in research, development, or production environments, these GPUs are invaluable.

Their adaptability across various domains within the machine learning landscape makes them a versatile asset for professionals and organizations alike, ensuring that they are always prepared to tackle the most demanding challenges.

Conclusion

NVIDIA's advanced GPUs are a game-changer in machine learning and artificial intelligence.

Whether you're just starting your machine learning journey or are an experienced practitioner, NVIDIA's GPUs are the key to unlocking your full potential in AI and ML.