No self-respecting website owner would ever put plagiarised content on their website. Yet, duplicate content is a common SEO challenge that many webmasters struggle to understand and overcome. Improving the amount of organic traffic coming to your website involves a lot of things.

Even if you have taken steps to speed up your WordPress website, perfected the on-page experience, and are actively building links to your most important pages, duplicate content can seriously undermine the results of your SEO efforts.

Doing SEO sometimes involves copying the strategies of your competitors. For instance, you may use a backlink checker to find out more about the backlink building strategy of your competitors to guide your own strategy. However, you cannot do the same with content.

But you probably already knew that. However, even when you have not copied content from another website, your website can face duplicate content issues.

Sounds confusing?

Let’s look at the definition of duplicate content in a little more detail to sort this confusion out.

Duplicate Content: The Definition

Here’s Google’s definition of duplicate content:

“Duplicate content generally refers to substantive blocks of content within or across domains that either completely match other content or are appreciably similar.”

The first thing that becomes clear is that instances of duplicate content are not just those that happen across different domains. In other words, even if two pages on your own website have “appreciably similar” content, Google and other search engines will treat it as an instance of content duplicacy.

With that said, the same website can have multiple pages with similar or even the same content simply because it makes sense. For instance, if an ecommerce store selling t-shirts has created separate pages for different sizes of the same t-shirt, it really doesn’t make sense for them to write different, unique product descriptions for each page.

In the above example, the owner of the ecommerce website had no malicious intent while “duplicating” their own content, but the search engine algorithms don’t understand that. Fortunately, the search engine companies that own these algorithms understand that not all instances of content duplicacy are backed by deceptive or malicious intent.

Since there’s no malicious intent, duplicate content should not be a problem from an SEO perspective. However, that is not the case. While the presence of duplicate content on a website may not result in a Google penalty, it can still negatively influence the search engine performance of a website.

Duplicate Content: Why It Is A Problem

To put it simply, duplicate content affects SEO performance because it is problematic from the point of view of a search engine algorithm. For search engines, duplicate content can cause three major issues:

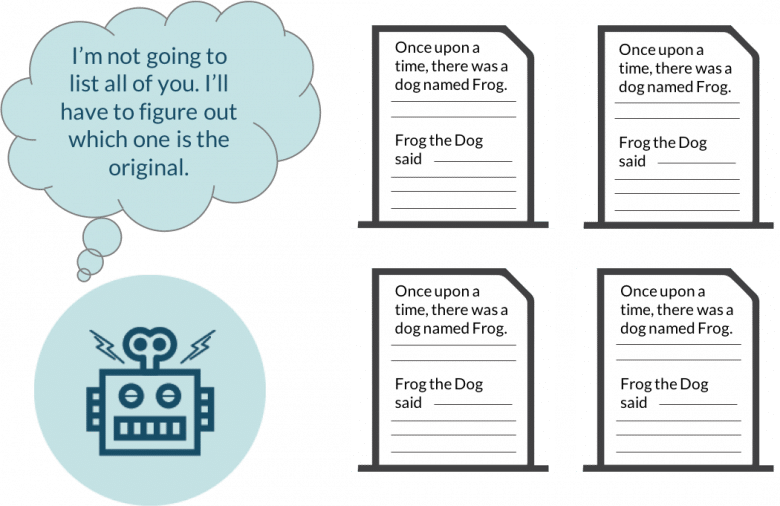

- When a search engine algorithm comes across two pages with similar or identical content, it has a hard time deciding which version of the page should be included in its index.

- Similarly, the algorithm has a hard time deciding which page’s link metrics (page authority, trust score, link equity, etc.) should be taken into account while deciding where to rank the page.

- It also makes it difficult for the algorithm to decide which of the two versions should be ranked in the search engine result pages (SERPs) for the relevant keywords.

When search engines face problems and get confused, website owners that depend on organic traffic are the first ones that suffer. This suffering usually comes in the form of loss of organic traffic and a drop in rankings. These problems usually occur because of the following two issues:

- Since search engines do not show multiple versions of the same content in their search results, they often choose the one version that they think is the best match for a particular search query. As a result, the visibility of each of the pages that contain duplicate content is negatively affected.

- The second issue occurs when other websites want to link to your website. Different website owners may come across different versions of the same content present on different URLs and link to them. As a result, the “link juice” is diluted between the different versions. Inbound links are an important ranking factor and the dilution of link juice can directly affect rankings.

Now that we know why duplicate content is a problem for both search engines and website owners, let’s look at why it happens.

Duplicate Content Issues: Causes

As mentioned earlier, most website owners don’t intentionally publish duplicate content on their websites. Yet, there’s a lot of duplicate content present on the web. A study conducted in 2015 revealed that 29% of the web is filled with duplicate content!

In this section we will explore the causes that lead website owners to unintentionally publish duplicate content on their websites. Let’s begin:

1. Pagination

To put it simply, pagination refers to cases where the same content spans multiple pages. For instance, if you have a directory of all the real estate developers in California, fitting all the listings on a single page will not make sense from a user experience point of view. You will have to break the list down into different pages. However, most of these pages will be dedicated to the same “topic” and thus, the search engine may consider them to be duplicates of each other.

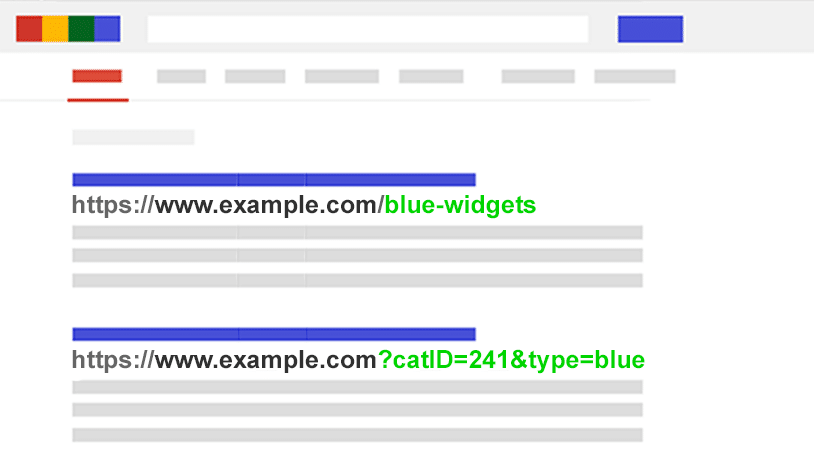

2. URL Variations

If you are using different URL parameters on your website, such as click tracking and analytics codes, these URLs may be perceived as unique pages by the search engine. Different URLs containing different parameters may be pointing to the same page but the search engine will treat each URL as a separate page.

This is true even when two URLs carry exactly the same parameters but in a different order.

Similarly, if your website is set up to assign a different session ID to every user, you may be unintentionally creating duplicate pages. For instance, for the URL www.yourwebsite.com/colourcategory, both www.yourwebsite.com/colourcategory-product?colour and www.yourwebsite.com/colourcategory?SESSID=33 can be treated as duplicates of it.

The same issue can be seen in websites that have separate printer friendly versions of their web pages.
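To make the URL variation problem concrete, here is a minimal Python sketch that collapses parameter variations of a URL into a single form. The list of ignored parameters is an assumption made for illustration; your own website may use different tracking or session parameters.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumption: these parameters never change what the page shows.
# Adjust the set to match the parameters your own website uses.
IGNORED_PARAMS = {"sessid", "utm_source", "utm_medium", "utm_campaign"}


def normalize_url(url: str) -> str:
    """Drop ignored parameters and sort the rest so equivalent URLs match."""
    parts = urlsplit(url)
    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    params.sort()  # the same parameters in a different order become identical
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(params), "")
    )
```

With this helper, a URL carrying a session ID and the same URL without it normalize to one string, which makes it easier to spot how many of your crawled URLs really point to the same page.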

3. Copied Content On Product Variations

As mentioned earlier, ecommerce websites are common victims of content duplicacy. This is because different versions of the same product are often shown on unique pages, but the product descriptions of the different versions are either “appreciably similar” or exactly the same.

This may happen on websites that feature products in different sizes or colours or both.

This may also happen in websites that sell products manufactured by a different company and use the description that is used or given by the manufacturer. Such cases of duplication are especially dangerous from an SEO point of view because a number of different websites may be using the same description shared by the manufacturer.

This means, the duplicacy in this case is across different domains.

4. Different Versions Of The Same Website

If your website has a version without the “www” prefix and one with the “www” prefix, the search engine will consider both versions as different websites. This means, the search engine will assume that one of the versions has content that is copied from the other.

The same may happen if your website has different versions with “HTTP” and “HTTPS”.
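As a rough illustration, the Python sketch below lists the four addresses that a single page can end up with when both prefixes and both protocols are live. The function name `site_variants` is made up for this example.

```python
from urllib.parse import urlsplit, urlunsplit


def site_variants(url: str) -> list[str]:
    """Return the http/https and www/non-www variants of one URL."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    bare = host[4:] if host.startswith("www.") else host
    variants = []
    for scheme in ("http", "https"):
        for hostname in (bare, "www." + bare):
            variants.append(
                urlunsplit((scheme, hostname, parts.path, parts.query, ""))
            )
    return variants
```

If all four variants return the same page without redirecting to a single preferred one, a search engine can see four copies of every page on the website.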

Those were the four most common reasons behind duplicate content issues found in websites. Now, let’s look at how you can overcome duplicate content issues on your website.

Duplicate Content Issues: Solutions

Since duplicate content is a common issue, there are a number of ways that you can fix it. In most cases, the primary action you are taking is telling the search engine which one of the two (or more) versions of the page is the correct one to index and rank.

To be able to fix duplicate content issues, you must first be aware of the number of instances of duplicate content on your website. This is where we will start:

1. Finding Duplicate Content Pages On Your Website

One of the easiest and most dependable ways to check whether or not your website is a victim of unintentional duplicate content is to check how many of its pages are indexed. If the number is higher than the number of pages that you have manually created, you have a content duplicacy problem.

You can do this in Google’s Search Console. If you don’t have an account in Google Search Console (I strongly recommend making one), you can also use the search engine itself.

Simply go to www.Google.com, enter “site:yourwebsitename.com” into the search bar, and hit enter.

The resulting SERP will have all of the pages of your website that have been indexed by the search engine. Once again, if this number is higher than the number of pages that you know you have created, treat it as a clear sign that there is a duplicate content problem on your website and move on to the next step.

You can also use different SEO tools to detect duplicate content. One such tool is Duplicate Content Checker by Sitechecker, which can save you the time of comparing pages by hand.
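If you already have the text of your pages on hand, you can also run a rough check yourself. The Python sketch below compares every pair of pages word by word and flags the pairs that look “appreciably similar”. The 0.9 threshold is an arbitrary assumption for illustration and not a value published by any search engine.

```python
from difflib import SequenceMatcher
from itertools import combinations


def find_similar_pages(pages: dict[str, str], threshold: float = 0.9):
    """Return (url_a, url_b, similarity) for page pairs above the threshold.

    `pages` maps a URL to the visible text of that page.
    """
    matches = []
    for (url_a, text_a), (url_b, text_b) in combinations(pages.items(), 2):
        # Compare the pages as sequences of words rather than characters.
        ratio = SequenceMatcher(None, text_a.split(), text_b.split()).ratio()
        if ratio >= threshold:
            matches.append((url_a, url_b, round(ratio, 2)))
    return matches
```

This compares every page with every other page, so it is only practical for small websites. For thousands of pages, a dedicated tool is the better choice.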

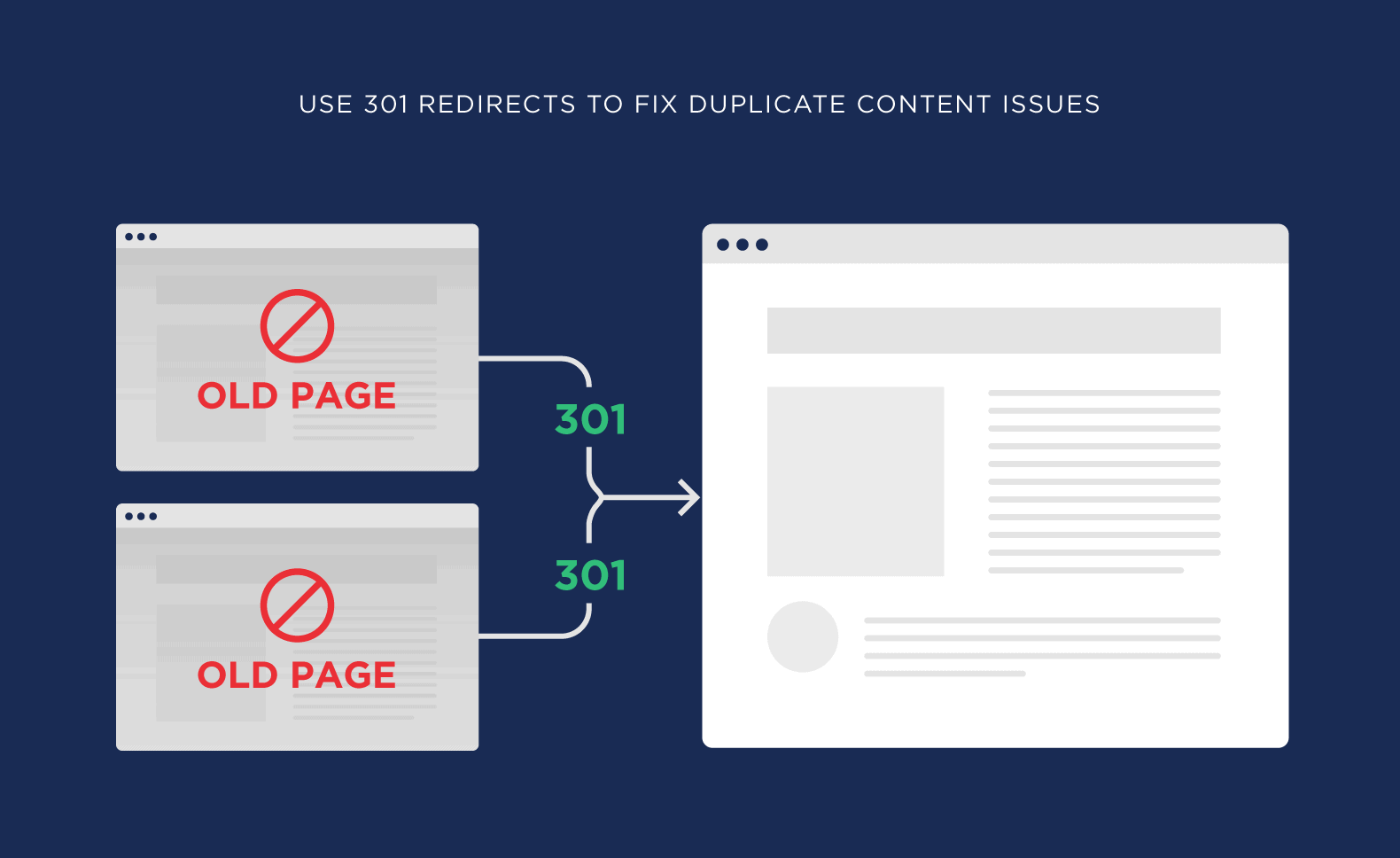

2. Apply 301 Redirects

As the name suggests, a 301 redirect sends users (and search engine crawlers) to a different page. So, if you have multiple versions of the same page indexed with a search engine, simply choose the “correct” version and implement 301 redirects to that version from all the other versions.

This way, every time a user or search engine crawler tries to access one of the duplicate versions, they will be automatically redirected to the correct version of the web page. Moreover, this will also allow for all the link metrics of all the duplicate pages to be passed to the correct version.

As a result, the correct page that you have specified here will be able to attain better visibility. In some cases, this practice may even result in an improved SEO performance by the “correct” page.
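In practice, 301 redirects are usually configured in your web server or CMS. Purely to show the mechanics, here is a minimal Python WSGI sketch. The paths in `REDIRECTS` are hypothetical examples, not paths from a real website.

```python
# Hypothetical map of duplicate paths to the "correct" version of the page.
REDIRECTS = {
    "/tshirt-small": "/tshirt",
    "/tshirt-large": "/tshirt",
}


def app(environ, start_response):
    """Answer duplicate paths with a 301 that points to the correct page."""
    path = environ.get("PATH_INFO", "/")
    target = REDIRECTS.get(path)
    if target is not None:
        # 301 tells browsers and crawlers that the move is permanent.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"This is the correct version of the page."]
```

You can try the app locally with `wsgiref.simple_server.make_server` from the Python standard library. The important part is the status line: a permanent redirect is what signals that the duplicate URL has been replaced by the target.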

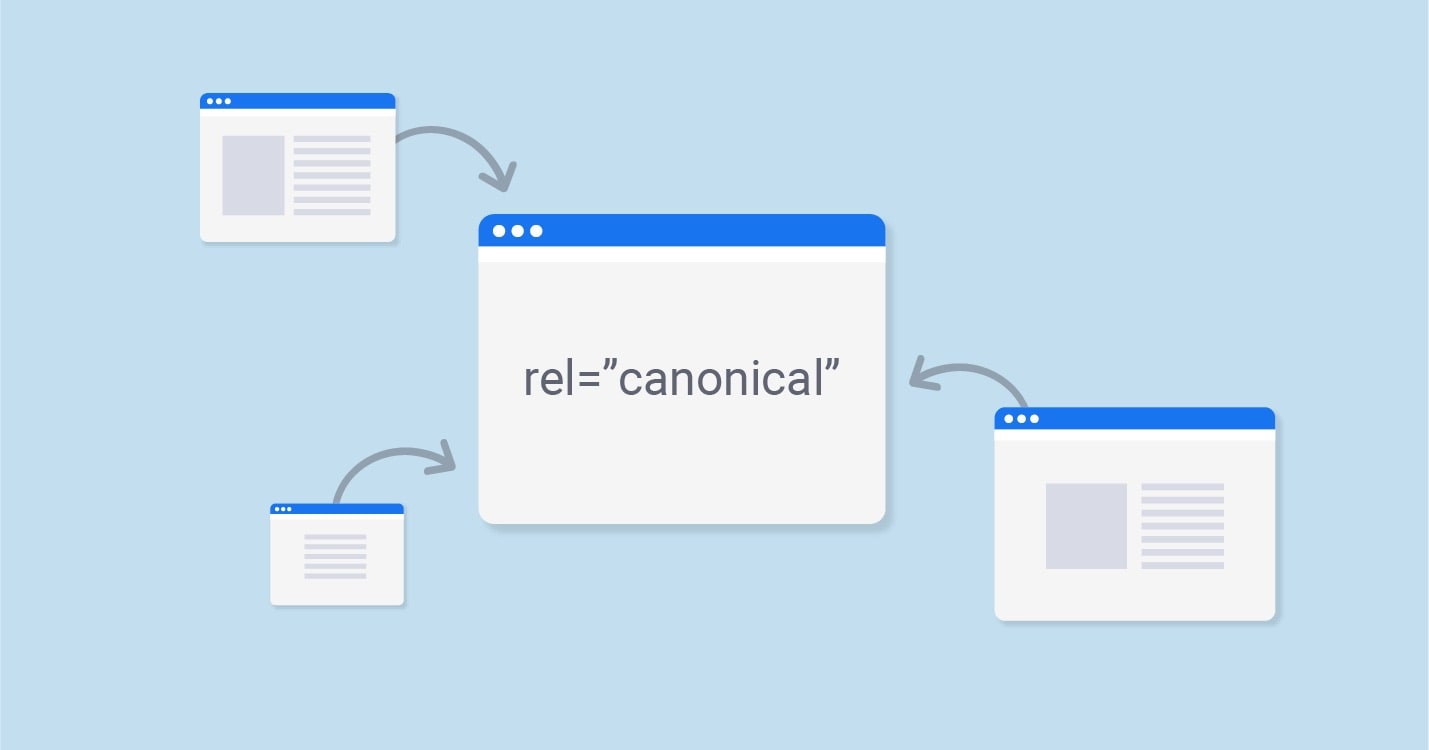

3. Implement A Rel="canonical" Attribute

In simple words, implementing a rel="canonical" attribute is a way of telling the search engine that the page is a copy of a specified URL (the “correct” version of that page). When a search engine crawler comes across a page with the rel="canonical" tag, it credits the link juice, the content, and other attributes that are important for search engines to the correct page.

The rel="canonical" attribute is an HTML attribute that is placed on a link element in the HTML head of duplicate pages, along with the URL of the correct or canonical page.

When you look at it from the perspective of the amount of link juice passed, the rel="canonical" attribute works just as well as the 301 redirect. The only difference is that this attribute can be applied at the page level and thus requires less time to implement.

However, that may not be the case where you have thousands of duplicate pages, something that is commonly seen in ecommerce websites.
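To show what this looks like in a page, the Python sketch below builds the canonical link element and reads it back out of a page’s HTML, which is handy for auditing many pages at once. The helper names are invented for this example.

```python
from html import escape
from html.parser import HTMLParser


def canonical_tag(canonical_url: str) -> str:
    """Build the link element that goes into the head of a duplicate page."""
    return f'<link rel="canonical" href="{escape(canonical_url, quote=True)}">'


class CanonicalFinder(HTMLParser):
    """Remember the href of the last rel="canonical" link element seen."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "link" and (attributes.get("rel") or "").lower() == "canonical":
            self.canonical = attributes.get("href")


def find_canonical(html_text: str):
    """Return the canonical URL declared in the HTML, or None if absent."""
    finder = CanonicalFinder()
    finder.feed(html_text)
    return finder.canonical
```

Running `find_canonical` over your duplicate pages is a quick way to confirm that each of them points to the version you actually want indexed.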

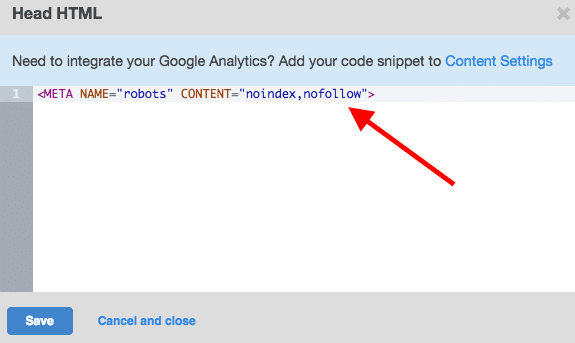

4. Implement The Noindex, Follow Meta Tag

The meta noindex, follow tag tells search engines that they should crawl a page but not index it. As the name suggests, it is an HTML meta tag that is added to the head of the pages that contain duplicate content.

This solution is particularly great for duplicacy issues that are a result of instances of pagination.

So, why let Google crawl these pages when you are asking it to not index them? Because Google has asked webmasters to do so. In its guidelines page dedicated to duplicate content, Google has explicitly asked webmasters to not restrict crawl access to any of the pages on their websites.
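For reference, the Python sketch below shows the tag itself and a small checker that reads the robots directives from a page’s HTML. The class and function names are made up for this example.

```python
from html.parser import HTMLParser

# The tag that is placed in the head of a duplicate page.
NOINDEX_FOLLOW_TAG = '<meta name="robots" content="noindex, follow">'


class RobotsMetaFinder(HTMLParser):
    """Collect the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_directives(html_text: str) -> set[str]:
    """Return the set of robots directives declared in the HTML."""
    finder = RobotsMetaFinder()
    finder.feed(html_text)
    return finder.directives
```

A page that is set up as described here should return both `noindex` and `follow`, and a page you want to rank should return neither `noindex` nor `nofollow`.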

5. Use Google Search Console To Highlight Your Preferred Domain

Google Search Console is a great tool. Among the many things webmasters can do with it, there is also a solution to duplicate content issues.

Using the Google Search Console, website owners have the option to set the preferred domain of their website. This is especially useful when you have different versions of your website (with or without the “www” prefix).

Similarly, you can also specify if Google's website crawlers should or should not crawl specific URL parameters with parameter handling.

While using Google Search Console may prove to be easier and more convenient in many cases, it is not always the best way to go about fixing duplicacy issues. This is because the changes you make in your Google Search Console will only take effect on Google’s search engine. Your website will still be facing the same issues on other search engines like Bing.

So, if you don’t depend on traffic from other search engines, using Google Search Console is a great idea.

Conclusion

Duplicate content issues can compromise the kind of results you can get from SEO. Since SEO involves a lot of hard work, a mistake that you unintentionally make should not get in the way of enjoying the fruits of your labor.

I hope that this article will help you gain a basic understanding of these issues and how you can go about solving them. If you have questions or doubts, feel free to drop them in the comment section below. I promise to be prompt and helpful with my replies.